Machine Learning: Lesson 1

For the first series of tutorials, I learned what supervised learning means. Supervised learning tries to answer the question "How does the input relate to the output?" Essentially, it is a strategy for building a function that produces accurate outputs based on prior experience, that is, a training set of examples whose correct outputs are already known.
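As a minimal sketch of the idea, assuming a single numeric input and a straight-line hypothesis h(x) = θ₀ + θ₁x, the code below "learns" from a small labeled training set and then predicts the output for an input it has not seen. It uses ordinary least squares as a swapped-in fitting method for simplicity. The function names `fit_line` and `predict` and the toy data are hypothetical, chosen only for illustration.

```python
# A minimal supervised-learning sketch: learn a straight-line hypothesis
# h(x) = theta0 + theta1 * x from labeled (input, output) pairs.
# fit_line/predict and the toy data are hypothetical, for illustration only.

def fit_line(xs, ys):
    """Return (theta0, theta1) minimizing squared error (ordinary least squares)."""
    m = len(xs)
    x_mean = sum(xs) / m
    y_mean = sum(ys) / m
    # Slope: covariance of x and y divided by the variance of x.
    cov = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    var = sum((x - x_mean) ** 2 for x in xs)
    theta1 = cov / var
    # Intercept: the fitted line passes through the point of means.
    theta0 = y_mean - theta1 * x_mean
    return theta0, theta1


def predict(theta0, theta1, x):
    """Apply the learned hypothesis to a new input."""
    return theta0 + theta1 * x


# Training set: past examples where the correct output is known.
xs = [1.0, 2.0, 3.0, 4.0]
ys = [3.0, 5.0, 7.0, 9.0]  # generated by y = 2x + 1

theta0, theta1 = fit_line(xs, ys)
print(theta0, theta1)                # learned parameters
print(predict(theta0, theta1, 5.0))  # prediction for an unseen input
```

Because the toy data lie exactly on y = 2x + 1, the learned parameters recover θ₀ = 1 and θ₁ = 2, and the prediction for x = 5 is 11.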

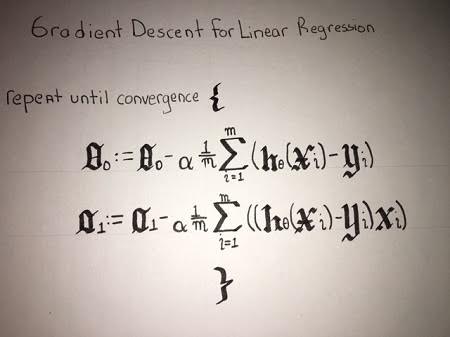

Gradient Descent

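Gradient descent is a first-order iterative optimization algorithm for finding a local minimum of a function. In machine learning it is used to minimize the cost function: starting from an initial guess for the parameters, it repeatedly takes a small step in the direction opposite the gradient (the direction of steepest descent) until it settles at a local minimum.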
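A minimal sketch, assuming the simplified one-parameter hypothesis h(x) = θ₁x and the squared-error cost J(θ₁) = (1/2m) Σ (θ₁x⁽ⁱ⁾ − y⁽ⁱ⁾)². Each iteration moves θ₁ a small step against the derivative dJ/dθ₁ = (1/m) Σ (θ₁x⁽ⁱ⁾ − y⁽ⁱ⁾)x⁽ⁱ⁾. The function name `gradient_descent`, the learning rate `alpha`, and the iteration count are hypothetical choices for illustration.

```python
# Gradient descent on the one-parameter squared-error cost
# J(theta1) = (1 / 2m) * sum((theta1 * x - y) ** 2).
# gradient_descent, alpha and num_iters are hypothetical illustration choices.

def gradient_descent(xs, ys, theta1=0.0, alpha=0.1, num_iters=100):
    m = len(xs)
    for _ in range(num_iters):
        # Derivative of J with respect to theta1 (the 1/2 cancels the square's 2).
        grad = sum((theta1 * x - y) * x for x, y in zip(xs, ys)) / m
        # Step "downhill": opposite the sign of the slope of J.
        theta1 -= alpha * grad
    return theta1


xs = [1.0, 2.0, 3.0]
ys = [2.0, 4.0, 6.0]  # generated by y = 2x, so the minimum is at theta1 = 2

theta1 = gradient_descent(xs, ys)
print(theta1)  # approaches 2.0
```

The learning rate matters: for this toy data, any `alpha` above roughly 0.43 makes the updates overshoot and diverge, while a very small `alpha` converges slowly. Because this squared-error cost is convex in θ₁, the local minimum that gradient descent finds is also the global one.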

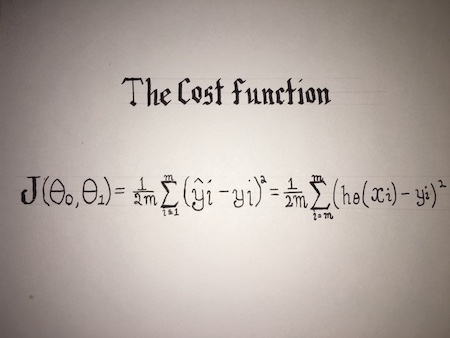

Cost Function

The cost function measures the accuracy of a hypothesis by comparing the hypothesis's predictions with the actual output values in the training set. Here, the cost function is written as a function of the hypothesis parameter θ₁ (theta one).

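The cost function is built from the squared vertical distances between each training point and the hypothesis line: for each x value, take the difference between the predicted and actual y values, square it, and add up the results. In the common squared-error form, this sum is also averaged over the m training examples and halved, giving J(θ₁) = (1/2m) Σ (h(x⁽ⁱ⁾) − y⁽ⁱ⁾)².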
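To make this concrete, here is a minimal sketch of the squared-error cost for the one-parameter hypothesis h(x) = θ₁x, assuming the 1/(2m) scaling used in many introductory courses (the averaging and the 1/2 factor do not change where the minimum lies). Evaluating J at a few values of θ₁ shows that it is smallest when the hypothesis line passes through the data. The function name `cost` and the toy data are hypothetical.

```python
# Squared-error cost for the one-parameter hypothesis h(x) = theta1 * x:
# J(theta1) = (1 / 2m) * sum((h(x_i) - y_i) ** 2)
# The name `cost` and the toy data below are hypothetical.

def cost(theta1, xs, ys):
    m = len(xs)
    # Each term is the squared vertical distance between a data point
    # and the hypothesis line at the same x.
    squared_errors = [(theta1 * x - y) ** 2 for x, y in zip(xs, ys)]
    return sum(squared_errors) / (2 * m)


xs = [1.0, 2.0, 3.0]
ys = [1.0, 2.0, 3.0]  # points on the line y = x

for theta1 in [0.0, 0.5, 1.0, 1.5]:
    print(theta1, cost(theta1, xs, ys))
```

J(1.0) is 0 because the line y = x passes through every point; moving θ₁ away from 1 in either direction increases the cost, which traces out the bowl-shaped curve that gradient descent walks down.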